"To prevent AI models from memorizing their input, we know exactly one robust method: differential privacy (DP). But crucially, DP requires you to precisely define what you want to protect. For example, to protect individual people, you must know which piece of data comes from which person in your dataset. If you have a dataset with identifiers, that's easy. If you want to use a humongous pile of data crawled from the open Web, that's not just hard: that's fundamentally impossible.

In practice, this means that for massive AI models, you can't really protect the massive pile of training data. This probably doesn't matter to you: chances are, you can't afford to train one from scratch anyway. But you may want to use sensitive data to fine-tune them, so they can perform better on some task. There, you may be able to use DP to mitigate the memorization risks on your sensitive data.

This still requires you to be OK with the inherent risk of the off-the-shelf LLMs, whose privacy and compliance story boils down to "everyone else is doing it, so it's probably fine?".

To avoid this last problem, and get robust protection, and probably get better results… Why not train a reasonably-sized model entirely on data that you fully understand instead?"

https://desfontain.es/blog/privacy-in-ai.html
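
To make the "you must know which piece of data comes from which person" point concrete, here is a minimal sketch (mine, not from the linked post) of a user-level noisy mean. It assumes every record carries a trustworthy user identifier and that the number of users can be treated as public. The function name, parameters, and the use of Python's `random` module are illustrative assumptions. A real deployment would need privacy accounting to turn the noise level into an (ε, δ) guarantee, plus a secure noise source, and both are left out here.

```python
import math
import random
from collections import defaultdict


def noisy_user_level_mean(vectors, user_ids, clip_norm, noise_multiplier, seed=None):
    """Sketch of a Gaussian-mechanism mean where the privacy unit is a person.

    Hypothetical helper for illustration only: no privacy accounting,
    and `random` is not a cryptographically secure noise source.
    """
    rng = random.Random(seed)
    dim = len(vectors[0])

    # Step 1: group by person. Without user_ids this step cannot be done,
    # and one person's influence on the result cannot be bounded.
    per_user = defaultdict(lambda: [0.0] * dim)
    for vec, uid in zip(vectors, user_ids):
        for i, x in enumerate(vec):
            per_user[uid][i] += x

    # Step 2: clip each person's total contribution to L2 norm <= clip_norm.
    total = [0.0] * dim
    for contribution in per_user.values():
        norm = math.sqrt(sum(x * x for x in contribution))
        scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
        for i, x in enumerate(contribution):
            total[i] += x * scale

    # Step 3: add Gaussian noise calibrated to the per-person bound.
    sigma = noise_multiplier * clip_norm
    # Assumption: the number of distinct users is treated as public.
    return [(t + rng.gauss(0.0, sigma)) / len(per_user) for t in total]
```

With Web-crawled data there is no reliable `user_ids` column, so step 1 has nothing to group on. That is the gap the quote describes.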

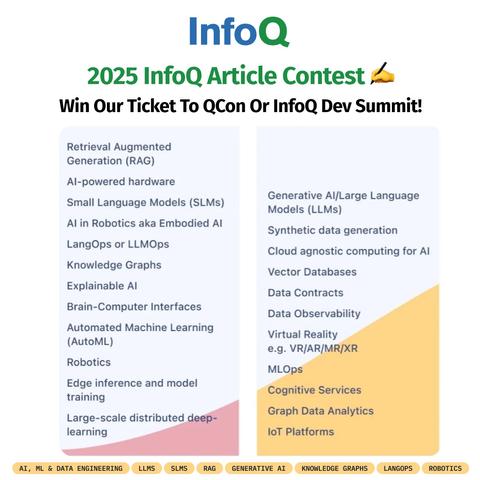
